The promise of autonomous driving (AD)—vehicles capable of navigating public roads without human intervention—represents a monumental leap in transportation technology, offering the potential to drastically reduce traffic fatalities, alleviate congestion, and redefine personal mobility. However, before these systems can achieve widespread deployment, they must meet unprecedented safety and reliability standards.

The inherent complexity of delegating driving decisions to Artificial Intelligence (AI), combined with the practically unbounded variability of real-world scenarios, makes AD safety verification and validation (V&V) arguably one of the hardest engineering and regulatory problems of our time. The industry is responding with a massive, multi-layered test effort that combines rigorous simulation, controlled track testing, and extensive real-world road driving.

This analysis examines the multi-layered methodology used to verify the safety of autonomous vehicles (AVs) and the critical technologies, especially the sensor suite and the AI decision-making layer, that must be proven reliable. It then reviews the global regulatory framework that struggles to keep pace with innovation, analyzes the challenge of testing against “unknown unknowns,” and looks ahead to a highly regulated, yet fully autonomous, global transportation system.

Layered V&V Methodology: Proving Safety at Scale

Because autonomous vehicle safety must be demonstrated across an enormous range of scenarios, equivalent to billions of miles of driving, no single testing method is sufficient. The industry therefore layers several verification methods to build statistical confidence in safety.

1. Large-Scale Simulation Testing

Most of the test mileage required to prove safety is generated synthetically in a virtual environment, minimizing risk and maximizing efficiency.

- Virtual Scenario Generation: AI-powered simulation platforms create highly accurate digital twins of the real world, including detailed physics, weather conditions, lighting changes, and realistic behavior of other vehicles and pedestrians. This allows developers to test millions of scenarios that are too dangerous or too rare to encounter in the real world.

- Edge Case and Adversarial Testing: Simulation is the only practical way to test edge cases repeatedly: rare or extreme scenarios that are crucial to a system’s resilience (e.g., unusual objects on the road, flash floods). Adversarial testing goes further, constructing situations intentionally designed to defeat the system so that its weaknesses are exposed and can be corrected.

- Hardware-in-the-Loop (HIL) and Software-in-the-Loop (SIL): These methods connect real physical components (HIL) or the production software alone (SIL) to a simulated environment. HIL, for example, feeds simulated inputs to real vehicle hardware, such as the onboard compute unit or a sensor, to verify that the physical components behave as expected before real-world deployment.
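
To make the SIL idea concrete, here is a minimal sketch in Python. It runs a stand-in longitudinal controller (the hypothetical `controller_under_test`, a simple gap-and-speed feedback law, not any production planner) in closed loop against a simulated lead vehicle that brakes hard, and sweeps the braking rate as an edge-case parameter. The initial speeds, gains, actuator limits, and the 2 m pass threshold are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class State:
    gap_m: float   # bumper-to-bumper distance to the lead vehicle (m)
    ego_v: float   # ego speed (m/s)
    lead_v: float  # lead vehicle speed (m/s)


def controller_under_test(s: State) -> float:
    """Hypothetical software under test: returns ego acceleration in m/s^2."""
    desired_gap = 5.0 + 1.5 * s.ego_v  # standstill gap + 1.5 s time headway
    accel = 0.2 * (s.gap_m - desired_gap) + 0.8 * (s.lead_v - s.ego_v)
    return max(-8.0, min(2.0, accel))  # assumed actuator limits


def run_lead_braking(lead_decel: float, dt: float = 0.05, t_end: float = 15.0) -> float:
    """Closed-loop simulation of a hard-braking lead vehicle; returns the minimum gap."""
    s = State(gap_m=40.0, ego_v=25.0, lead_v=25.0)
    min_gap = s.gap_m
    for _ in range(round(t_end / dt)):
        a_ego = controller_under_test(s)  # the software "in the loop"
        s.lead_v = max(0.0, s.lead_v - lead_decel * dt)
        s.ego_v = max(0.0, s.ego_v + a_ego * dt)
        s.gap_m += (s.lead_v - s.ego_v) * dt
        min_gap = min(min_gap, s.gap_m)
    return min_gap


# Sweep the edge-case parameter and flag runs that violate the assumed 2 m margin.
results = {d: run_lead_braking(d) for d in (2.0, 4.0, 6.0, 8.0, 10.0)}
failures = [d for d, gap in results.items() if gap < 2.0]
print(results, failures)
```

The value of this setup is determinism: the same scenario can be replayed unchanged after every software change, which is what makes SIL regression testing cheap compared with track or road time.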

2. Controlled Environment Track Testing

Before full deployment on public roads, AVs undergo controlled and repeated testing at private proving grounds.

- Critical Scenario Replication: The test track is used to recreate specific high-risk scenarios identified in simulations or from real-world incidents (e.g., sudden lane changes, complex unprotected left turns, collision mitigation maneuvers). The controlled nature of the track ensures precise repeatability under identical conditions.

- Sensor Calibration and Validation: This controlled setting is essential for the initial calibration and validation of the entire sensor suite—ensuring that the camera, LiDAR, and radar systems are accurately integrated and that their outputs precisely match known physical parameters.

- Certification Testing: The track is used for standard regulatory compliance testing, such as braking performance testing, evasive maneuver verification, and initial verification of the vehicle’s ability to reach a Minimal Risk Condition (MRC), for example by pulling over safely after a system failure.
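
Validating a sensor against known physical parameters often comes down to comparing its measurements with surveyed ground truth. The Python sketch below computes the mean bias and root-mean-square error (RMSE) of range readings against targets placed at surveyed distances on the track. The function names, sample values, and the 5 cm / 10 cm tolerances are hypothetical placeholders, not values from any standard.

```python
import math
from typing import Sequence, Tuple


def range_error_stats(measured: Sequence[float], surveyed: Sequence[float]) -> Tuple[float, float]:
    """Return (mean bias, RMSE) of measured ranges against surveyed ground truth, in metres."""
    if len(measured) != len(surveyed) or not measured:
        raise ValueError("need equally sized, non-empty sequences")
    errors = [m - s for m, s in zip(measured, surveyed)]
    bias = sum(errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return bias, rmse


def calibration_passes(measured: Sequence[float], surveyed: Sequence[float],
                       max_abs_bias: float = 0.05, max_rmse: float = 0.10) -> bool:
    """Hypothetical acceptance check: systematic bias and scatter must both be small."""
    bias, rmse = range_error_stats(measured, surveyed)
    return abs(bias) <= max_abs_bias and rmse <= max_rmse


# Illustrative track run: targets surveyed at 10, 20, and 30 m.
surveyed = [10.0, 20.0, 30.0]
lidar = [10.02, 19.97, 30.04]
print(calibration_passes(lidar, surveyed))  # True
```

Separating bias from RMSE is deliberate: a constant offset usually points to an extrinsic calibration error, while large scatter points to noise or mounting problems.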

3. Public Road Data Accumulation

The final layer involves the accumulation of real-world experience, which is necessary to capture the nuances and “unknowns” of human behavior.

- Safety Driver Supervision: Initial trials on public roads require a trained safety driver who monitors the system and is ready to take manual control (a disengagement) immediately if the AV system fails to behave safely. The frequency and context of these disengagements are important public safety metrics.

- Real-World Data Harvesting: Every mile driven on public roads is also a data-collection run. Vehicles record sensor data, AI decisions, and human interventions, and this vast repository of real-world data is used to retrain and improve AI models, directly addressing performance gaps found in the field.

- Shadow Mode Testing: Vehicles can operate in shadow mode (or passive mode) where the AV system runs in the background, making driving decisions but not physically controlling the vehicle. The system’s decisions are then compared with the actions of actual human drivers to identify and correct potential errors without risk.
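
At its core, shadow mode is a comparison between what the driver did and what the AV stack would have done. Below is a minimal Python sketch of that comparison. The `Frame` record, the choice of acceleration and steering as the compared signals, and the tolerances are all assumptions for illustration; real programs compare much richer outputs, such as full planned trajectories.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    t: float             # timestamp (s)
    human_accel: float   # acceleration the driver actually applied (m/s^2)
    human_steer: float   # steering angle the driver actually applied (rad)
    shadow_accel: float  # acceleration the AV stack would have commanded
    shadow_steer: float  # steering angle the AV stack would have commanded


def flag_discrepancies(frames: List[Frame], accel_tol: float = 1.5,
                       steer_tol: float = 0.05) -> List[float]:
    """Return timestamps where the shadow decision diverges from the human beyond tolerance."""
    return [
        f.t for f in frames
        if abs(f.human_accel - f.shadow_accel) > accel_tol
        or abs(f.human_steer - f.shadow_steer) > steer_tol
    ]


log = [
    Frame(0.0, 0.0, 0.00, 0.2, 0.01),   # close agreement
    Frame(0.1, -3.0, 0.00, 0.0, 0.00),  # driver braked, the stack would not have
    Frame(0.2, 0.0, 0.00, 0.0, 0.20),   # the stack would have steered sharply
]
print(flag_discrepancies(log))  # [0.1, 0.2]
```

Flagged moments are then reviewed or replayed in simulation to decide whether the human or the software made the better call; a disagreement is not automatically a software error.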

Critical Technology Verification: Sensors and AI

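The safety of autonomous vehicles depends above all on the reliability of the perception layer (sensors) and the decision layer (AI software). Because no complex component can be proven flawless, rigorous testing must show that both meet their safety requirements with failure rates low enough to satisfy defined safety targets.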

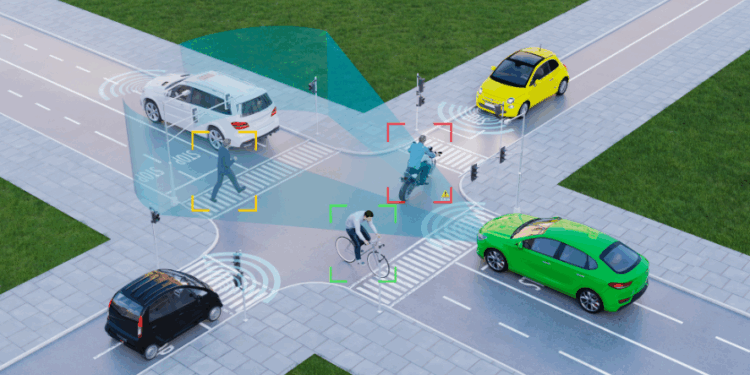

1. Sensor Redundancy and Fusion

Vehicles must be able to accurately perceive their environment under all conditions, requiring redundant and varied sensor technology.

- LiDAR (Light Detection and Ranging): LiDAR is tested for its ability to generate highly accurate three-dimensional point clouds, regardless of lighting conditions. Testing focuses on reliability in adverse weather (fog, heavy rain, snow) where laser light is scattered.

- Camera Vision System: Cameras play a critical role in reading signs and traffic lights, as well as identifying the color and texture of objects. Testing must verify the system’s performance against varying light contrasts, sun glare, and fast-moving objects, ensuring the AI can correctly interpret visual information.

- Sensor Fusion Integrity: The most critical test is sensor fusion, the system’s ability to combine data from all sensors (LiDAR, radar, cameras) accurately into a single, coherent, and reliable world model. Redundancy ensures that if one sensor fails (e.g., a camera is blocked by mud), the remaining sensors can compensate.
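
One simple way to see how fusion and redundancy interact is inverse-variance weighting of independent range estimates: each sensor's reading counts in proportion to its assumed precision, and a missing sensor simply drops out. The Python sketch below assumes independent Gaussian errors and made-up standard deviations. Production stacks typically use Kalman-style filters over full object tracks, so treat this as an illustration of the principle rather than of any particular system.

```python
import math
from typing import Dict, Optional, Tuple

Reading = Optional[Tuple[float, float]]  # (range in m, 1-sigma error in m), or None if unavailable


def fuse_range(readings: Dict[str, Reading]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion; returns (fused range, fused 1-sigma)."""
    weight_sum = 0.0
    weighted_total = 0.0
    for reading in readings.values():
        if reading is None:  # failed or blocked sensor: leave it out
            continue
        value, sigma = reading
        weight = 1.0 / (sigma * sigma)
        weight_sum += weight
        weighted_total += weight * value
    if weight_sum == 0.0:
        raise RuntimeError("no usable sensor: hand over to the fallback (MRC) logic")
    return weighted_total / weight_sum, math.sqrt(1.0 / weight_sum)


all_ok = {"lidar": (20.0, 0.1), "radar": (20.4, 0.4), "camera": (21.0, 1.0)}
camera_blocked = {"lidar": (20.0, 0.1), "radar": (20.4, 0.4), "camera": None}
print(fuse_range(all_ok))
print(fuse_range(camera_blocked))
```

The fused uncertainty is smaller than any single sensor's. Losing the camera barely moves the estimate here because the assumed LiDAR precision dominates; losing the LiDAR instead would widen the fused uncertainty several-fold, which is exactly the kind of degradation redundancy testing has to quantify.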

2. Verification of the AI Decision Stack

Proving the safety of the software—the brain of the AV—is the most complex aspect of V&V.

- Compliance with Operational Design Domain (ODD): Each AV is designed to operate safely only within a specific ODD (e.g., specific geographical area, weather conditions, road types). Testing must rigorously verify the system’s ability to recognize when it is operating outside its ODD and initiate a safe fallback (MRC).

- Prediction of Human Behavior: The AV must safely interact with unpredictable human drivers and pedestrians. The AI’s ability to correctly predict the likely trajectories and intentions of surrounding agents is constantly tested and refined, focusing on scenarios where human intent is ambiguous.

- Safety Case Documentation: Manufacturers must produce a comprehensive Safety Case: a structured, auditable argument, backed by evidence, that the AV system is acceptably safe under all relevant conditions. This legal and technical record becomes the basis for regulatory approval.
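
The ODD requirement can be expressed as a runtime monitor: compare current conditions with the declared ODD and request a fallback when any bound is violated. This Python sketch is hypothetical throughout. The four ODD attributes, their thresholds, and the mode names are illustrative assumptions, and real ODD specifications are far richer.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class ODD:
    road_types: FrozenSet[str]
    max_speed_limit_kph: float
    min_visibility_m: float
    allows_precipitation: bool


@dataclass(frozen=True)
class Conditions:
    road_type: str
    speed_limit_kph: float
    visibility_m: float
    precipitation: bool


def odd_violations(odd: ODD, c: Conditions) -> List[str]:
    """List every ODD bound the current conditions violate (empty list = inside the ODD)."""
    violations = []
    if c.road_type not in odd.road_types:
        violations.append("road_type")
    if c.speed_limit_kph > odd.max_speed_limit_kph:
        violations.append("speed_limit")
    if c.visibility_m < odd.min_visibility_m:
        violations.append("visibility")
    if c.precipitation and not odd.allows_precipitation:
        violations.append("precipitation")
    return violations


def select_mode(odd: ODD, c: Conditions) -> str:
    """Drive normally inside the ODD; otherwise start a maneuver toward an MRC."""
    return "NOMINAL" if not odd_violations(odd, c) else "MINIMAL_RISK_MANEUVER"


urban_odd = ODD(frozenset({"urban_arterial", "residential"}), 60.0, 150.0, False)
foggy = Conditions("urban_arterial", 50.0, 80.0, False)
print(odd_violations(urban_odd, foggy), select_mode(urban_odd, foggy))
# ['visibility'] MINIMAL_RISK_MANEUVER
```

Returning the full list of violations, rather than a single boolean, makes it straightforward for V&V to check that each ODD boundary triggers the fallback on its own.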

Regulatory Frameworks: The Global Challenge

Governments worldwide are struggling to create a regulatory environment that ensures public safety without stifling rapid technological advancement.

1. Standardization and Classification (SAE Levels)

International bodies are working to standardize the language and testing requirements for autonomous vehicles.

- SAE J3016 Classification: The industry relies on the SAE International J3016 standard, which defines six levels of driving automation (Level 0 to Level 5). Regulatory testing is tailored to these levels, with Level 3 (Conditional Automation) requiring the greatest scrutiny on the transition of control back to the human driver.

- ISO 26262 and ISO 21448: These standards provide frameworks for Functional Safety (ISO 26262), which ensures that electronic systems do not fail hazardously, and for Safety of the Intended Functionality (SOTIF, ISO 21448, first published as ISO/PAS 21448), which ensures the system does not cause unreasonable risk even when its components are working correctly (e.g., misinterpreting a shadow as an object).

- Cross-Border Harmonization: Major economic blocs (EU, US, China) are working toward harmonizing testing standards and vehicle certification requirements to allow AVs to operate globally, minimizing regulatory barriers to deployment.
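
As one concrete piece of ISO 26262, each hazard is rated for Severity (S0 to S3), Exposure (E0 to E4), and Controllability (C0 to C3), and the combination determines an Automotive Safety Integrity Level (ASIL) from QM (quality management only) up to ASIL D. The standard specifies this as a lookup table. The Python sketch below instead uses the widely noted shortcut that, for classes S1 to S3, E1 to E4, and C1 to C3, the table agrees with the sum S + E + C (7 = A, 8 = B, 9 = C, 10 = D, lower = QM). The function name is hypothetical; check results against the standard's own table before relying on them.

```python
def determine_asil(severity: int, exposure: int, controllability: int) -> str:
    """ASIL from S, E, C class indices (e.g. S3 -> 3) using the sum shortcut."""
    if not (0 <= severity <= 3 and 0 <= exposure <= 4 and 0 <= controllability <= 3):
        raise ValueError("expected S0-S3, E0-E4, C0-C3")
    if 0 in (severity, exposure, controllability):
        return "QM"  # a class-0 rating means no ASIL is assigned; reported as QM here
    total = severity + exposure + controllability
    return {7: "A", 8: "B", 9: "C", 10: "D"}.get(total, "QM")


# A hazard rated S3 (life-threatening), E4 (high exposure), C3 (hard to control):
print(determine_asil(3, 4, 3))  # D
```

The resulting ASIL then drives how much rigor (analysis, testing, independence of review) ISO 26262 demands for the components involved.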

2. The Dilemma of Metrics

Regulators face a fundamental problem: how much data is enough to prove safety?

- Mileage vs. Scenario-Based Testing: Traditional approaches relied on accumulated mileage (often millions of miles). Current thinking shifts toward scenario-based testing, which focuses on proving competence in a finite set of critical, high-risk scenarios rather than on general driving time alone.

- Safety Metrics: New regulatory metrics are emerging, such as measuring the system’s capacity to maintain a minimum safe distance from other objects (safety distance metrics) and the frequency and context of required disengagements per thousand miles driven.

- Accountability and Liability: Legal frameworks are grappling with determining liability in the event of an AV-caused accident. The Safety Case documentation and proof of rigorous V&V testing become the central elements in establishing accountability.
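
A well-known example of a safety distance metric is the longitudinal safe distance from Mobileye's Responsibility-Sensitive Safety (RSS) model. It assumes the following vehicle may accelerate at up to a_max_accel during its response time rho and then brakes at no less than a_min_brake, while the lead vehicle may brake as hard as a_max_brake. The Python sketch below implements that formula together with a simple disengagement-rate helper; the parameter values in the example are illustrative assumptions, not values mandated by any regulator.

```python
def rss_min_longitudinal_gap(v_rear: float, v_front: float, rho: float,
                             a_max_accel: float, a_min_brake: float,
                             a_max_brake: float) -> float:
    """RSS minimum safe following distance (m); speeds in m/s, accelerations in m/s^2.

    d_min = max(0, v_r*rho + 0.5*a_max_accel*rho^2
                   + (v_r + rho*a_max_accel)^2 / (2*a_min_brake)
                   - v_f^2 / (2*a_max_brake))
    """
    v_after_response = v_rear + rho * a_max_accel  # worst-case speed when braking starts
    d = (v_rear * rho
         + 0.5 * a_max_accel * rho ** 2
         + v_after_response ** 2 / (2.0 * a_min_brake)
         - v_front ** 2 / (2.0 * a_max_brake))
    return max(0.0, d)


def disengagements_per_1000_miles(disengagements: int, miles: float) -> float:
    """Disengagement frequency normalized to 1,000 miles of driving."""
    if miles <= 0:
        raise ValueError("miles must be positive")
    return 1000.0 * disengagements / miles


# Both vehicles at 20 m/s; 0.5 s response; 2 m/s^2 worst-case acceleration;
# the follower brakes at >= 4 m/s^2, the leader at <= 8 m/s^2 (illustrative values).
print(rss_min_longitudinal_gap(20.0, 20.0, 0.5, 2.0, 4.0, 8.0))  # 40.375
print(disengagements_per_1000_miles(3, 12_000))                  # 0.25
```

A metric like this turns "keep a safe distance" into a pass/fail check that can be evaluated at every timestep of a simulated, track, or road scenario.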

The Statistical and Ethical Quagmire

The final, and most profound, challenges in proving AV safety are deeply rooted in statistics, philosophy, and ethics.

1. Testing the “Unknown Unknowns”

The greatest risk is the scenario that the developers have not yet conceived or tested.

- The Rare Event Problem: The U.S. traffic fatality rate is on the order of one death per 100 million vehicle miles traveled. To show statistically that an AV is safer than a human driver, it would need to drive hundreds of millions or even billions of miles without a fatal incident. Accumulating that volume of real-world driving is impractical for any single fleet on a useful timeline.

- Accelerated Testing and Falsification: To bridge this gap, the industry employs accelerated testing methodologies, deliberately increasing the frequency of critical scenarios in simulation and track environments. The goal is falsification: actively searching for conditions under which the system fails, so that each flaw can be found and corrected.

- The Adversarial Environment: The system must be robust enough to handle malicious and unforeseen interventions (e.g., a child throwing an unusual object onto the road, a coordinated cyber-attack). Testing must include strategies to ensure the system is not vulnerable to engineered perceptual failures.
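
The arithmetic behind the rare event problem is short. If failures follow a Poisson process, driving n miles with zero failures supports the claim, at confidence C, that the failure rate is below r only when n >= -ln(1 - C) / r. With an assumed round-number benchmark of one fatality per 100 million miles and C = 0.95, that is roughly 300 million failure-free miles. The second function sketches one common accelerated-testing technique, importance sampling: scenarios are drawn from a distribution shifted toward the critical region and reweighted by the likelihood ratio, so rare-failure probabilities can be estimated from far fewer runs. Here a standard normal variable stands in for a hypothetical normalized scenario parameter (for example, cut-in aggressiveness) with failures only beyond 4 sigma; that model and the function names are assumptions for illustration.

```python
import math
import random


def failure_free_miles_needed(rate_per_mile: float, confidence: float) -> float:
    """Zero-failure miles needed to show, at `confidence`, that the true failure
    rate is below `rate_per_mile` (failures modeled as a Poisson process)."""
    if rate_per_mile <= 0.0 or not 0.0 < confidence < 1.0:
        raise ValueError("rate must be positive and confidence in (0, 1)")
    return -math.log(1.0 - confidence) / rate_per_mile


def tail_prob_importance(threshold: float, n: int, rng: random.Random) -> float:
    """Estimate P(X > threshold) for X ~ N(0, 1) by sampling from N(threshold, 1)
    and reweighting each hit by the likelihood ratio N(0,1) / N(threshold,1)."""
    total = 0.0
    for _ in range(n):
        x = rng.gauss(threshold, 1.0)  # biased toward the critical region
        if x > threshold:              # simulated "failure"
            total += math.exp(-threshold * x + 0.5 * threshold ** 2)
    return total / n


print(f"{failure_free_miles_needed(1e-8, 0.95):.3e}")  # 2.996e+08

exact = 0.5 * math.erfc(4.0 / math.sqrt(2.0))  # about 3.2e-5
estimate = tail_prob_importance(4.0, 100_000, random.Random(0))
print(exact, estimate)
```

With plain Monte Carlo, 100,000 samples would contain only about three failures at this threshold, so the estimate would be very noisy; the reweighted estimator typically lands within a few percent of the exact value on the same budget.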

2. The Ethics of AI Decision-Making

Beyond technical competence, the AV’s decision process in unavoidable collision scenarios presents an ethical challenge.

- The Trolley Problem in Practice: Although the dilemma is often oversimplified, ethical testing addresses scenarios where a collision is inevitable and the AI must choose among outcomes to minimize harm (e.g., swerving into a barrier versus into another vehicle). Where developers encode such trade-offs explicitly, the choices require broad community consensus rather than a purely engineering decision.

- Bias in Training Data: If the training data used to teach the AI is not diverse (e.g., it underrepresents certain demographics or lighting conditions), the final system may exhibit perceptual biases, leading to unequal safety outcomes for different groups. V&V must rigorously test for these biases and mitigate them.

- Transparency and Explainability (XAI): Safety-critical systems require Explainable AI (XAI). This means the system must be able to document and articulate the rationale behind its decisions (e.g., “I initiated braking because the integrated sensor model predicted a pedestrian crossing with 95% confidence”). This explainability is crucial for auditability and trustworthiness.
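
Testing for perceptual bias usually means slicing evaluation results by condition or group and comparing error rates across slices. The Python sketch below computes pedestrian-detection recall per slice and the largest gap between slices. The record format, the day/night slices, and the 5-point tolerance are hypothetical choices for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, bool]  # (slice label, was this ground-truth pedestrian detected?)


def recall_by_slice(records: Iterable[Record]) -> Dict[str, float]:
    """Detection recall per slice, over ground-truth pedestrians only."""
    detected: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for label, hit in records:
        total[label] += 1
        detected[label] += int(hit)
    return {label: detected[label] / total[label] for label in total}


def max_recall_gap(records: Iterable[Record]) -> float:
    """Largest recall difference between any two slices."""
    recalls = recall_by_slice(records)
    return max(recalls.values()) - min(recalls.values())


# Illustrative evaluation set: 10 pedestrians by day (9 found), 10 at night (7 found).
records = [("day", i < 9) for i in range(10)] + [("night", i < 7) for i in range(10)]
gap = max_recall_gap(records)
print(recall_by_slice(records), round(gap, 2))  # {'day': 0.9, 'night': 0.7} 0.2
if gap > 0.05:  # hypothetical tolerance
    print("bias check failed: review training-data coverage for the weaker slice")
```

The same pattern extends to any slice the safety case cares about, such as weather, region, or pedestrian attributes, provided the evaluation set has enough samples per slice for the comparison to be meaningful.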

Conclusion

Autonomous driving safety verification and validation is a monumental and ongoing endeavor that has challenged traditional vehicle testing concepts. It goes far beyond simple crash tests, relying instead on billions of miles of simulation, rigorous sensor fusion validation, and the development of auditable Safety Cases based on global standards such as SOTIF.

The rapid pace of autonomous vehicle development is matched by an equally intense effort to address the “unknown unknowns” through accelerated scenario testing and to integrate deep ethical considerations into the core logic of AI. The future of autonomous mobility depends on the industry’s ability to maintain a transparent, data-driven, and consistently safe V&V approach, ensuring the convenience and efficiency of autonomous vehicles are realized without compromising public trust or safety.